Summarize Text

This tutorial demonstrates text summarization using built-in chains and LangGraph.

See here for a previous version of this page, which showcased the legacy chain RefineDocumentsChain.

Suppose you have a set of documents (PDFs, Notion pages, customer questions, etc.) and you want to summarize the content.

LLMs are a great tool for this given their proficiency in understanding and synthesizing text.

In the context of retrieval-augmented generation, summarizing text can help distill the information in a large number of retrieved documents to provide context for an LLM.

In this walkthrough we’ll go over how to summarize content from multiple documents using LLMs.

Concepts

Concepts we will cover are:

Using language models.

Using document loaders, specifically the CheerioWebBaseLoader to load content from an HTML webpage.

Two ways to summarize or otherwise combine documents.

- Stuff, which simply concatenates documents into a prompt;

- Map-reduce, for larger sets of documents. This splits documents into batches, summarizes those, and then summarizes the summaries.

Setup

Jupyter Notebook

This and other tutorials are perhaps most conveniently run in a Jupyter notebook. Going through guides in an interactive environment is a great way to better understand them. See here for instructions on how to install.

Installation

To install LangChain run:

npm i langchain @langchain/core

For more details, see our Installation guide.

LangSmith

Many of the applications you build with LangChain will contain multiple steps with multiple invocations of LLM calls. As these applications get more and more complex, it becomes crucial to be able to inspect what exactly is going on inside your chain or agent. The best way to do this is with LangSmith.

After you sign up at the link above, make sure to set your environment variables to start logging traces:

export LANGSMITH_TRACING="true"

export LANGSMITH_API_KEY="..."

# Reduce tracing latency if you are not in a serverless environment

# export LANGCHAIN_CALLBACKS_BACKGROUND=true

Overview

A central question for building a summarizer is how to pass your documents into the LLM’s context window. Two common approaches for this are:

- Stuff: Simply “stuff” all your documents into a single prompt. This is the simplest approach.

- Map-reduce: Summarize each document on its own in a “map” step and then “reduce” the summaries into a final summary.

Note that map-reduce is especially effective when understanding of a sub-document does not rely on preceding context, for example when summarizing a corpus of many shorter documents. In other cases, such as summarizing a novel or body of text with an inherent sequence, iterative refinement may be more effective.
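As a rough illustration of that decision, the sketch below picks a strategy from an estimated token count. The helper names (`estimateTokens`, `chooseStrategy`) and the four-characters-per-token heuristic are assumptions made for illustration and are not part of LangChain; a real application would count tokens with the model's own tokenizer.

```typescript
// Hypothetical helper: very rough token estimate (about 4 characters per token).
// This heuristic is an assumption; use the model's tokenizer for real counts.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

type Strategy = "stuff" | "map-reduce";

// Choose "stuff" when all documents fit within the token budget,
// otherwise fall back to "map-reduce".
function chooseStrategy(docs: string[], tokenBudget: number): Strategy {
  const total = docs.reduce((sum, doc) => sum + estimateTokens(doc), 0);
  return total <= tokenBudget ? "stuff" : "map-reduce";
}

const shortDocs = ["A short note.", "Another short note."];
console.log(chooseStrategy(shortDocs, 100)); // "stuff"
console.log(chooseStrategy(shortDocs, 5)); // "map-reduce"
```

In practice the budget should leave room for the prompt instructions and for the model's response, not only for the documents.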

First we load in our documents. We will use CheerioWebBaseLoader to load a blog post:

import "cheerio";

import { CheerioWebBaseLoader } from "@langchain/community/document_loaders/web/cheerio";

const pTagSelector = "p";

const cheerioLoader = new CheerioWebBaseLoader(

"https://lilianweng.github.io/posts/2023-06-23-agent/",

{

selector: pTagSelector,

}

);

const docs = await cheerioLoader.load();

Let’s next select a chat model:

Pick your chat model:

- Groq

- OpenAI

- Anthropic

- Google Gemini

- FireworksAI

- MistralAI

- VertexAI

Install dependencies

- npm

- yarn

- pnpm

npm i @langchain/groq

yarn add @langchain/groq

pnpm add @langchain/groq

Add environment variables

GROQ_API_KEY=your-api-key

Instantiate the model

import { ChatGroq } from "@langchain/groq";

const llm = new ChatGroq({

model: "llama-3.3-70b-versatile",

temperature: 0

});

Install dependencies

- npm

- yarn

- pnpm

npm i @langchain/openai

yarn add @langchain/openai

pnpm add @langchain/openai

Add environment variables

OPENAI_API_KEY=your-api-key

Instantiate the model

import { ChatOpenAI } from "@langchain/openai";

const llm = new ChatOpenAI({

model: "gpt-4o-mini",

temperature: 0

});

Install dependencies

- npm

- yarn

- pnpm

npm i @langchain/anthropic

yarn add @langchain/anthropic

pnpm add @langchain/anthropic

Add environment variables

ANTHROPIC_API_KEY=your-api-key

Instantiate the model

import { ChatAnthropic } from "@langchain/anthropic";

const llm = new ChatAnthropic({

model: "claude-3-5-sonnet-20240620",

temperature: 0

});

Install dependencies

- npm

- yarn

- pnpm

npm i @langchain/google-genai

yarn add @langchain/google-genai

pnpm add @langchain/google-genai

Add environment variables

GOOGLE_API_KEY=your-api-key

Instantiate the model

import { ChatGoogleGenerativeAI } from "@langchain/google-genai";

const llm = new ChatGoogleGenerativeAI({

model: "gemini-2.0-flash",

temperature: 0

});

Install dependencies

- npm

- yarn

- pnpm

npm i @langchain/community

yarn add @langchain/community

pnpm add @langchain/community

Add environment variables

FIREWORKS_API_KEY=your-api-key

Instantiate the model

import { ChatFireworks } from "@langchain/community/chat_models/fireworks";

const llm = new ChatFireworks({

model: "accounts/fireworks/models/llama-v3p1-70b-instruct",

temperature: 0

});

Install dependencies

- npm

- yarn

- pnpm

npm i @langchain/mistralai

yarn add @langchain/mistralai

pnpm add @langchain/mistralai

Add environment variables

MISTRAL_API_KEY=your-api-key

Instantiate the model

import { ChatMistralAI } from "@langchain/mistralai";

const llm = new ChatMistralAI({

model: "mistral-large-latest",

temperature: 0

});

Install dependencies

- npm

- yarn

- pnpm

npm i @langchain/google-vertexai

yarn add @langchain/google-vertexai

pnpm add @langchain/google-vertexai

Add environment variables

GOOGLE_APPLICATION_CREDENTIALS=credentials.json

Instantiate the model

import { ChatVertexAI } from "@langchain/google-vertexai";

const llm = new ChatVertexAI({

model: "gemini-1.5-flash",

temperature: 0

});

Stuff: summarize in a single LLM call

We can use createStuffDocumentsChain, especially if using larger context window models such as:

- 128k token OpenAI gpt-4o

- 200k token Anthropic claude-3-5-sonnet-20240620

The chain will take a list of documents, insert them all into a prompt, and pass that prompt to an LLM:

import { createStuffDocumentsChain } from "langchain/chains/combine_documents";

import { StringOutputParser } from "@langchain/core/output_parsers";

import { PromptTemplate } from "@langchain/core/prompts";

// Define prompt

const prompt = PromptTemplate.fromTemplate(

"Summarize the main themes in these retrieved docs: {context}"

);

// Instantiate

const chain = await createStuffDocumentsChain({

llm: llm,

outputParser: new StringOutputParser(),

prompt,

});

// Invoke

const result = await chain.invoke({ context: docs });

console.log(result);

The retrieved documents discuss the development and capabilities of autonomous agents powered by large language models (LLMs). Here are the main themes:

1. **LLM as a Core Controller**: LLMs are positioned as the central intelligence in autonomous agent systems, capable of performing complex tasks beyond simple text generation. They can be framed as general problem solvers, with various implementations like AutoGPT, GPT-Engineer, and BabyAGI serving as proof-of-concept demonstrations.

2. **Task Decomposition and Planning**: Effective task management is crucial for LLMs. Techniques like Chain of Thought (CoT) and Tree of Thoughts (ToT) are highlighted for breaking down complex tasks into manageable steps. CoT encourages step-by-step reasoning, while ToT explores multiple reasoning paths, enhancing the agent's problem-solving capabilities.

3. **Integration of External Tools**: The use of external tools significantly enhances LLM capabilities. Frameworks like MRKL and Toolformer allow LLMs to interact with various APIs and tools, improving their performance in specific tasks. This modular approach enables LLMs to route inquiries to specialized modules, combining neural and symbolic reasoning.

4. **Self-Reflection and Learning**: Self-reflection mechanisms are essential for agents to learn from past actions and improve over time. Approaches like ReAct and Reflexion integrate reasoning with action, allowing agents to evaluate their performance and adjust strategies based on feedback.

5. **Memory and Context Management**: The documents discuss different types of memory (sensory, short-term, long-term) and their relevance to LLMs. The challenge of finite context length in LLMs is emphasized, as it limits the ability to retain and utilize historical information effectively. Techniques like external memory storage and vector databases are suggested to mitigate these limitations.

6. **Challenges and Limitations**: Several challenges are identified, including the reliability of natural language interfaces, difficulties in long-term planning, and the need for robust task decomposition. The documents note that LLMs may struggle with unexpected errors and formatting issues, which can hinder their performance in real-world applications.

7. **Emerging Applications**: The potential applications of LLM-powered agents are explored, including scientific discovery, autonomous design, and interactive simulations (e.g., generative agents mimicking human behavior). These applications demonstrate the versatility and innovative possibilities of LLMs in various domains.

Overall, the documents present a comprehensive overview of the current state of LLM-powered autonomous agents, highlighting their capabilities, methodologies, and the challenges they face in practical implementations.
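Conceptually, the stuff step amounts to joining the document texts and substituting them into the prompt's `{context}` placeholder. The following is a simplified sketch of that idea, not the library's actual implementation; `SimpleDoc`, `stuffPrompt`, and the blank-line separator are assumptions made for illustration.

```typescript
// Minimal stand-in for a document; only the text content matters here.
interface SimpleDoc {
  pageContent: string;
}

// Join all document texts and substitute them into the {context} placeholder.
function stuffPrompt(
  template: string,
  docs: SimpleDoc[],
  separator = "\n\n" // assumed separator between documents
): string {
  const context = docs.map((doc) => doc.pageContent).join(separator);
  // Use a replacer function so "$" sequences in the context are not
  // interpreted as special replacement patterns.
  return template.replace("{context}", () => context);
}

const examplePrompt = stuffPrompt(
  "Summarize the main themes in these retrieved docs: {context}",
  [{ pageContent: "Agents plan tasks." }, { pageContent: "Agents use tools." }]
);
console.log(examplePrompt);
```

Because every document ends up in one prompt, the combined text must fit within the model's context window.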

Streaming

Note that we can also stream the result token-by-token:

const stream = await chain.stream({ context: docs });

for await (const token of stream) {

process.stdout.write(token + "|");

}

|The| retrieved| documents| discuss| the| development| and| capabilities| of| autonomous| agents| powered| by| large| language| models| (|LL|Ms|).| Here| are| the| main| themes|:

|1|.| **|LL|M| as| a| Core| Controller|**|:| L|LM|s| are| positioned| as| the| central| intelligence| in| autonomous| agent| systems|,| capable| of| performing| complex| tasks| beyond| simple| text| generation|.| They| can| be| framed| as| general| problem| sol|vers|,| with| various| implementations| like| Auto|GPT|,| GPT|-|Engineer|,| and| Baby|AG|I| serving| as| proof|-of|-con|cept| demonstrations|.

|2|.| **|Task| De|composition| and| Planning|**|:| Effective| task| management| is| crucial| for| L|LM|s| to| handle| complicated| tasks|.| Techniques| like| Chain| of| Thought| (|Co|T|)| and| Tree| of| Thoughts| (|To|T|)| are| highlighted| for| breaking| down| tasks| into| manageable| steps| and| exploring| multiple| reasoning| paths|.| Additionally|,| L|LM|+|P| integrates| classical| planning| methods| to| enhance| long|-term| planning| capabilities|.

|3|.| **|Self|-|Reflection| and| Learning|**|:| Self|-ref|lection| mechanisms| are| essential| for| agents| to| learn| from| past| actions| and| improve| their| decision|-making| processes|.| Framework|s| like| Re|Act| and| Reflex|ion| incorporate| dynamic| memory| and| self|-ref|lection| to| refine| reasoning| skills| and| enhance| performance| through| iterative| learning|.

|4|.| **|Tool| Util|ization|**|:| The| integration| of| external| tools| significantly| extends| the| capabilities| of| L|LM|s|.| Appro|aches| like| MR|KL| and| Tool|former| demonstrate| how| L|LM|s| can| be| augmented| with| various| APIs| to| perform| specialized| tasks|,| enhancing| their| functionality| in| real|-world| applications|.

|5|.| **|Memory| and| Context| Management|**|:| The| documents| discuss| different| types| of| memory| (|sens|ory|,| short|-term|,| long|-term|)| and| their| relevance| to| L|LM|s|.| The| challenge| of| finite| context| length| is| emphasized|,| as| it| limits| the| model|'s| ability| to| retain| and| utilize| historical| information| effectively|.| Techniques| like| vector| stores| and| approximate| nearest| neighbors| (|ANN|)| are| suggested| to| improve| retrieval| speed| and| memory| management|.

|6|.| **|Challenges| and| Limit|ations|**|:| Several| limitations| of| current| L|LM|-powered| agents| are| identified|,| including| issues| with| the| reliability| of| natural| language| interfaces|,| difficulties| in| long|-term| planning|,| and| the| need| for| improved| efficiency| in| task| execution|.| The| documents| also| highlight| the| importance| of| human| feedback| in| refining| model| outputs| and| addressing| potential| biases|.

|7|.| **|Emer|ging| Applications|**|:| The| potential| applications| of| L|LM|-powered| agents| are| explored|,| including| scientific| discovery|,| autonomous| design|,| and| interactive| simulations| (|e|.g|.,| gener|ative| agents|).| These| applications| showcase| the| versatility| of| L|LM|s| in| various| domains|,| from| drug| discovery| to| social| behavior| simulations|.

|Overall|,| the| documents| present| a| comprehensive| overview| of| the| current| state| of| L|LM|-powered| autonomous| agents|,| their| capabilities|,| methodologies| for| improvement|,| and| the| challenges| they| face| in| practical| applications|.|||
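The loop above relies on the stream being an async iterable of string chunks. The sketch below mimics that pattern with a hypothetical `fakeTokenStream` generator in place of a real model, to show how chunks can be accumulated into the full text while they arrive. Both function names are made up for this example.

```typescript
// Hypothetical stand-in for a model stream: yields string chunks one by one.
async function* fakeTokenStream(tokens: string[]): AsyncGenerator<string> {
  for (const token of tokens) {
    yield token;
  }
}

// Consume any async iterable of chunks and return the concatenated text.
async function collectStream(stream: AsyncIterable<string>): Promise<string> {
  let text = "";
  for await (const chunk of stream) {
    text += chunk; // chunks could also be rendered incrementally here
  }
  return text;
}

collectStream(fakeTokenStream(["The", " retrieved", " documents"])).then(
  (text) => console.log(text) // "The retrieved documents"
);
```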

Go deeper

- You can easily customize the prompt.

- You can easily try different LLMs (e.g., Claude) via the llm parameter.

Map-Reduce: summarize long texts via parallelization

Let’s unpack the map-reduce approach. For this, we’ll first map each document to an individual summary using an LLM. Then we’ll reduce or consolidate those summaries into a single global summary.

Note that the map step is typically parallelized over the input documents.
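In plain TypeScript, the map and reduce steps can be sketched with `Promise.all`. The `summarize` function here is a hypothetical placeholder that truncates text instead of calling an LLM, so the example only illustrates the control flow and not the quality of a real summary.

```typescript
// Hypothetical placeholder for an LLM call: keeps the first `maxWords` words.
async function summarize(text: string, maxWords = 3): Promise<string> {
  return text.split(/\s+/).filter(Boolean).slice(0, maxWords).join(" ");
}

async function mapReduceSummarize(docs: string[]): Promise<string> {
  // Map: summarize every document independently and in parallel.
  const summaries = await Promise.all(docs.map((doc) => summarize(doc)));
  // Reduce: consolidate the individual summaries into one final summary.
  return summarize(summaries.join(" "), 6);
}

mapReduceSummarize([
  "Agents plan tasks step by step",
  "Tools extend what models can do",
]).then((summary) => console.log(summary));
```

Since each map call depends only on its own document, the calls can run concurrently; only the reduce step has to wait for all of them.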

LangGraph, built on top of @langchain/core, supports map-reduce workflows and is well-suited to this problem:

- LangGraph allows for individual steps (such as successive summarizations) to be streamed, allowing for greater control of execution;

- LangGraph’s checkpointing supports error recovery, extending with human-in-the-loop workflows, and easier incorporation into conversational applications.

- The LangGraph implementation is straightforward to modify and extend, as we will see below.

Map

Let’s first define the prompt associated with the map step. We can use a summarization prompt similar to the one in the stuff approach above:

import { ChatPromptTemplate } from "@langchain/core/prompts";

const mapPrompt = ChatPromptTemplate.fromMessages([

["user", "Write a concise summary of the following: \n\n{context}"],

]);

We can also use the Prompt Hub to store and fetch prompts.

This will work with your LangSmith API key.

For example, see the map prompt here.

import { pull } from "langchain/hub";

import { ChatPromptTemplate } from "@langchain/core/prompts";

const mapPrompt = await pull<ChatPromptTemplate>("rlm/map-prompt");

Reduce

We also define a prompt that takes the document mapping results and reduces them into a single output.

// Also available via the hub at `rlm/reduce-prompt`

let reduceTemplate = `

The following is a set of summaries:

{docs}

Take these and distill it into a final, consolidated summary

of the main themes.

`;

const reducePrompt = ChatPromptTemplate.fromMessages([

["user", reduceTemplate],

]);

Orchestration via LangGraph

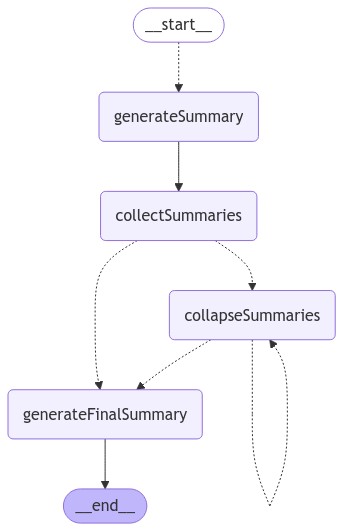

Below we implement a simple application that maps the summarization step on a list of documents, then reduces them using the above prompts.

Map-reduce flows are particularly useful when texts are long compared to the context window of an LLM. For long texts, we need a mechanism that ensures that the context to be summarized in the reduce step does not exceed a model’s context window size. Here we implement a recursive “collapsing” of the summaries: the inputs are partitioned based on a token limit, and summaries are generated of the partitions. This step is repeated until the total length of the summaries is within a desired limit, allowing for the summarization of arbitrary-length text.
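The collapsing loop can be pictured with the following self-contained sketch. It loosely mirrors the idea rather than the library code: `countTokens` treats whitespace-separated words as tokens, `condense` is a placeholder for an LLM summary that keeps the first half of the words, and `partition` and `collapse` are hypothetical names introduced here.

```typescript
// Assumption: whitespace-separated words stand in for model tokens.
const countTokens = (text: string): number =>
  text.split(/\s+/).filter(Boolean).length;

// Hypothetical placeholder for an LLM summary: keeps the first half of the words.
const condense = (text: string): string => {
  const words = text.split(/\s+/).filter(Boolean);
  return words.slice(0, Math.max(1, Math.floor(words.length / 2))).join(" ");
};

const totalTokens = (items: string[]): number =>
  items.reduce((sum, item) => sum + countTokens(item), 0);

// Greedily group summaries so that each group stays within the token limit
// (a single oversized summary still forms a group of its own).
function partition(summaries: string[], tokenMax: number): string[][] {
  const groups: string[][] = [];
  let current: string[] = [];
  let currentTokens = 0;
  for (const summary of summaries) {
    const tokens = countTokens(summary);
    if (current.length > 0 && currentTokens + tokens > tokenMax) {
      groups.push(current);
      current = [];
      currentTokens = 0;
    }
    current.push(summary);
    currentTokens += tokens;
  }
  if (current.length > 0) groups.push(current);
  return groups;
}

// Repeat partition-and-summarize until the summaries fit within the limit.
function collapse(summaries: string[], tokenMax: number): string[] {
  let current = summaries;
  while (totalTokens(current) > tokenMax) {
    const next = partition(current, tokenMax).map((group) =>
      condense(group.join(" "))
    );
    // Guard: stop if a round makes no progress, to avoid looping forever.
    if (totalTokens(next) >= totalTokens(current)) break;
    current = next;
  }
  return current;
}

const collapsed = collapse(
  ["one two three four", "five six seven eight", "nine ten eleven twelve"],
  6
);
console.log(collapsed); // ["one two", "five six", "nine ten"]
```

The no-progress guard is a design choice of this sketch: without it, a limit that the placeholder summarizer can never reach would cause an endless loop.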

First we chunk the blog post into smaller “sub documents” to be mapped:

import { TokenTextSplitter } from "@langchain/textsplitters";

const textSplitter = new TokenTextSplitter({

chunkSize: 1000,

chunkOverlap: 0,

});

const splitDocs = await textSplitter.splitDocuments(docs);

console.log(`Generated ${splitDocs.length} documents.`);

Generated 6 documents.
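To make the roles of `chunkSize` and `chunkOverlap` concrete, here is a simplified sketch of fixed-size chunking. It is not how TokenTextSplitter is implemented: the hypothetical `chunkWords` helper treats whitespace-separated words as tokens, whereas a real splitter works on tokenizer output.

```typescript
// Assumption: whitespace-separated words stand in for tokenizer tokens.
function chunkWords(
  text: string,
  chunkSize: number,
  chunkOverlap = 0
): string[] {
  if (chunkSize <= 0 || chunkOverlap < 0 || chunkOverlap >= chunkSize) {
    throw new Error("Require chunkSize > 0 and 0 <= chunkOverlap < chunkSize");
  }
  const words = text.split(/\s+/).filter(Boolean);
  const chunks: string[] = [];
  // Each new chunk starts `step` words after the previous one.
  const step = chunkSize - chunkOverlap;
  for (let start = 0; start < words.length; start += step) {
    chunks.push(words.slice(start, start + chunkSize).join(" "));
    // Stop once the current window has reached the end of the text.
    if (start + chunkSize >= words.length) break;
  }
  return chunks;
}

console.log(chunkWords("a b c d e f g", 3)); // ["a b c", "d e f", "g"]
console.log(chunkWords("a b c d e", 3, 1)); // ["a b c", "c d e"]
```

With an overlap of zero, as in the tutorial, every word lands in exactly one chunk; a positive overlap repeats the tail of each chunk at the start of the next.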

Next, we define our graph. Note that we define an artificially low maximum token length of 1,000 tokens to illustrate the “collapsing” step.

import {

collapseDocs,

splitListOfDocs,

} from "langchain/chains/combine_documents/reduce";

import { Document } from "@langchain/core/documents";

import { StateGraph, Annotation, Send } from "@langchain/langgraph";

let tokenMax = 1000;

async function lengthFunction(documents: Document[]): Promise<number> {

const tokenCounts = await Promise.all(

documents.map(async (doc) => {

return llm.getNumTokens(doc.pageContent);

})

);

return tokenCounts.reduce((sum, count) => sum + count, 0);

}

const OverallState = Annotation.Root({

contents: Annotation<string[]>,

// Notice here we pass a reducer function.

// This is because we want to combine all the summaries we generate

// from individual nodes back into one list; this is essentially

// the "reduce" part.

summaries: Annotation<string[]>({

reducer: (state, update) => state.concat(update),

}),

collapsedSummaries: Annotation<Document[]>,

finalSummary: Annotation<string>,

});

// This will be the state of the node that we will "map" all

// documents to in order to generate summaries

interface SummaryState {

content: string;

}

// Here we generate a summary, given a document

const generateSummary = async (

state: SummaryState

): Promise<{ summaries: string[] }> => {

const prompt = await mapPrompt.invoke({ context: state.content });

const response = await llm.invoke(prompt);

return { summaries: [String(response.content)] };

};

// Here we define the logic to map out over the documents

// We will use this as an edge in the graph

const mapSummaries = (state: typeof OverallState.State) => {

// We will return a list of `Send` objects

// Each `Send` object consists of the name of a node in the graph

// as well as the state to send to that node

return state.contents.map(

(content) => new Send("generateSummary", { content })

);

};

const collectSummaries = async (state: typeof OverallState.State) => {

return {

collapsedSummaries: state.summaries.map(

(summary) => new Document({ pageContent: summary })

),

};

};

async function _reduce(input: Document[]): Promise<string> {

const prompt = await reducePrompt.invoke({ docs: input });

const response = await llm.invoke(prompt);

return String(response.content);

}

// Add node to collapse summaries

const collapseSummaries = async (state: typeof OverallState.State) => {

const docLists = splitListOfDocs(

state.collapsedSummaries,

lengthFunction,

tokenMax

);

const results = [];

for (const docList of docLists) {

results.push(await collapseDocs(docList, _reduce));

}

return { collapsedSummaries: results };

};

// This represents a conditional edge in the graph that determines

// if we should collapse the summaries or not

async function shouldCollapse(state: typeof OverallState.State) {

let numTokens = await lengthFunction(state.collapsedSummaries);

if (numTokens > tokenMax) {

return "collapseSummaries";

} else {

return "generateFinalSummary";

}

}

// Here we will generate the final summary

const generateFinalSummary = async (state: typeof OverallState.State) => {

const response = await _reduce(state.collapsedSummaries);

return { finalSummary: response };

};

// Construct the graph

const graph = new StateGraph(OverallState)

.addNode("generateSummary", generateSummary)

.addNode("collectSummaries", collectSummaries)

.addNode("collapseSummaries", collapseSummaries)

.addNode("generateFinalSummary", generateFinalSummary)

.addConditionalEdges("__start__", mapSummaries, ["generateSummary"])

.addEdge("generateSummary", "collectSummaries")

.addConditionalEdges("collectSummaries", shouldCollapse, [

"collapseSummaries",

"generateFinalSummary",

])

.addConditionalEdges("collapseSummaries", shouldCollapse, [

"collapseSummaries",

"generateFinalSummary",

])

.addEdge("generateFinalSummary", "__end__");

const app = graph.compile();
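The reducer on `summaries` is what lets the parallel `generateSummary` calls write to the same state key without overwriting each other. The sketch below is a simplified model of that behavior, not LangGraph's implementation; `Reducer`, `concatReducer`, and `applyUpdates` are names introduced for this example.

```typescript
// Simplified model of a state channel with a reducer (not LangGraph's implementation).
type Reducer<T> = (state: T, update: T) => T;

// Same reducer logic as in `OverallState.summaries`: append updates to the list.
const concatReducer: Reducer<string[]> = (state, update) =>
  state.concat(update);

// Apply a series of node updates to a channel, one at a time.
function applyUpdates<T>(initial: T, updates: T[], reducer: Reducer<T>): T {
  return updates.reduce((state, update) => reducer(state, update), initial);
}

// Each parallel `generateSummary` call returns a one-element list.
const nodeUpdates = [["summary A"], ["summary B"], ["summary C"]];
const merged = applyUpdates<string[]>([], nodeUpdates, concatReducer);
console.log(merged); // ["summary A", "summary B", "summary C"]
```

Keys without a reducer, such as `collapsedSummaries`, can be thought of as using a "replace" reducer: each update simply overwrites the previous value.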

LangGraph allows the graph structure to be plotted to help visualize its function:

// Note: tslab only works inside a jupyter notebook. Don't worry about running this code yourself!

import * as tslab from "tslab";

const image = await app.getGraph().drawMermaidPng();

const arrayBuffer = await image.arrayBuffer();

await tslab.display.png(new Uint8Array(arrayBuffer));

When running the application, we can stream the graph to observe its sequence of steps. Below, we will simply print out the name of the step.

Note that because we have a loop in the graph, it can be helpful to specify a recursionLimit on its execution. This will raise a specific error when the specified limit is exceeded.

let finalSummary = null;

for await (const step of await app.stream(

{ contents: splitDocs.map((doc) => doc.pageContent) },

{ recursionLimit: 10 }

)) {

console.log(Object.keys(step));

if (step.hasOwnProperty("generateFinalSummary")) {

finalSummary = step.generateFinalSummary;

}

}

[ 'generateSummary' ]

[ 'generateSummary' ]

[ 'generateSummary' ]

[ 'generateSummary' ]

[ 'generateSummary' ]

[ 'generateSummary' ]

[ 'collectSummaries' ]

[ 'generateFinalSummary' ]

finalSummary;

{

finalSummary: 'The summaries highlight the evolving landscape of large language models (LLMs) and their integration into autonomous agents and various applications. Key themes include:\n' +

'\n' +

'1. **Autonomous Agents and LLMs**: Projects like AutoGPT and GPT-Engineer demonstrate the potential of LLMs as core controllers in autonomous systems, utilizing techniques such as Chain of Thought (CoT) and Tree of Thoughts (ToT) for task management and reasoning. These agents can learn from past actions through self-reflection mechanisms, enhancing their problem-solving capabilities.\n' +

'\n' +

'2. **Supervised Fine-Tuning and Human Feedback**: The importance of human feedback in fine-tuning models is emphasized, with methods like Algorithm Distillation (AD) showing promise in improving model performance while preventing overfitting. The integration of various memory types and external memory systems is suggested to enhance cognitive capabilities.\n' +

'\n' +

'3. **Integration of External Tools**: The incorporation of external tools and APIs significantly extends LLM capabilities, particularly in specialized tasks like maximum inner-product search (MIPS) and domain-specific applications such as ChemCrow for drug discovery. Frameworks like MRKL and HuggingGPT illustrate the potential for LLMs to effectively utilize these tools.\n' +

'\n' +

'4. **Evaluation Discrepancies**: There are notable discrepancies between LLM-based assessments and expert evaluations, indicating that LLMs may struggle with specialized knowledge. This raises concerns about their reliability in critical applications, such as scientific discovery.\n' +

'\n' +

'5. **Limitations of LLMs**: Despite advancements, LLMs face limitations, including finite context lengths, challenges in long-term planning, and difficulties in adapting to unexpected errors. These constraints hinder their robustness compared to human capabilities.\n' +

'\n' +

'Overall, the advancements in LLMs and their applications reveal both their potential and limitations, emphasizing the need for ongoing research and development to enhance their effectiveness in various domains.'

}

In the corresponding LangSmith trace we can see the individual LLM calls, grouped under their respective nodes.
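To illustrate why a recursion limit is useful for a graph with a loop, the sketch below runs a toy workflow that stops with an error once a step budget is used up. This is a conceptual model only, not how LangGraph enforces its limit; `Step`, `runWithLimit`, and `nextNode` are hypothetical names.

```typescript
// Hypothetical step function: returns the name of the next node, or null when done.
type Step = (node: string) => string | null;

// Run a looped workflow but stop with an error once `limit` steps have been taken.
function runWithLimit(start: string, step: Step, limit: number): string[] {
  const visited: string[] = [];
  let node: string | null = start;
  while (node !== null) {
    if (visited.length >= limit) {
      throw new Error(`Step limit of ${limit} exceeded`);
    }
    visited.push(node);
    node = step(node);
  }
  return visited;
}

// A toy workflow that collapses twice before producing the final summary.
let collapsesLeft = 2;
const nextNode: Step = (node) => {
  if (node === "generateFinalSummary") return null;
  if (collapsesLeft > 0) {
    collapsesLeft -= 1;
    return "collapseSummaries";
  }
  return "generateFinalSummary";
};

console.log(runWithLimit("collectSummaries", nextNode, 10));
```

If the collapse step never brought the summaries under the token limit, the loop would run indefinitely; the limit turns that situation into an explicit error.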

Go deeper

Customization

- As shown above, you can customize the LLMs and prompts for map and reduce stages.

Real-world use-case

- See this blog post case-study on analyzing user interactions (questions about LangChain documentation)!

- The blog post and associated repo also introduce clustering as a means of summarization.

- This opens up another path beyond the stuff or map-reduce approaches that is worth considering.

Next steps

We encourage you to check out the how-to guides for more detail on:

- Built-in document loaders and text-splitters

- Integrating various combine-document chains into a RAG application

- Incorporating retrieval into a chatbot

and other concepts.