Build a Retrieval Augmented Generation (RAG) App: Part 1

One of the most powerful applications enabled by LLMs is the sophisticated question-answering (Q&A) chatbot: an application that can answer questions about specific source information. These applications use a technique known as Retrieval Augmented Generation, or RAG.

This is a multi-part tutorial:

- Part 1 (this guide) introduces RAG and walks through a minimal implementation.

- Part 2 extends the implementation to accommodate conversation-style interactions and multi-step retrieval processes.

This tutorial will show how to build a simple Q&A application over a text data source. Along the way we’ll go over a typical Q&A architecture and highlight additional resources for more advanced Q&A techniques. We’ll also see how LangSmith can help us trace and understand our application. LangSmith will become increasingly helpful as our application grows in complexity.

If you’re already familiar with basic retrieval, you might also be interested in this high-level overview of different retrieval techniques.

Note: Here we focus on Q&A for unstructured data. If you are interested in RAG over structured data, check out our tutorial on question answering over SQL data.

Overview

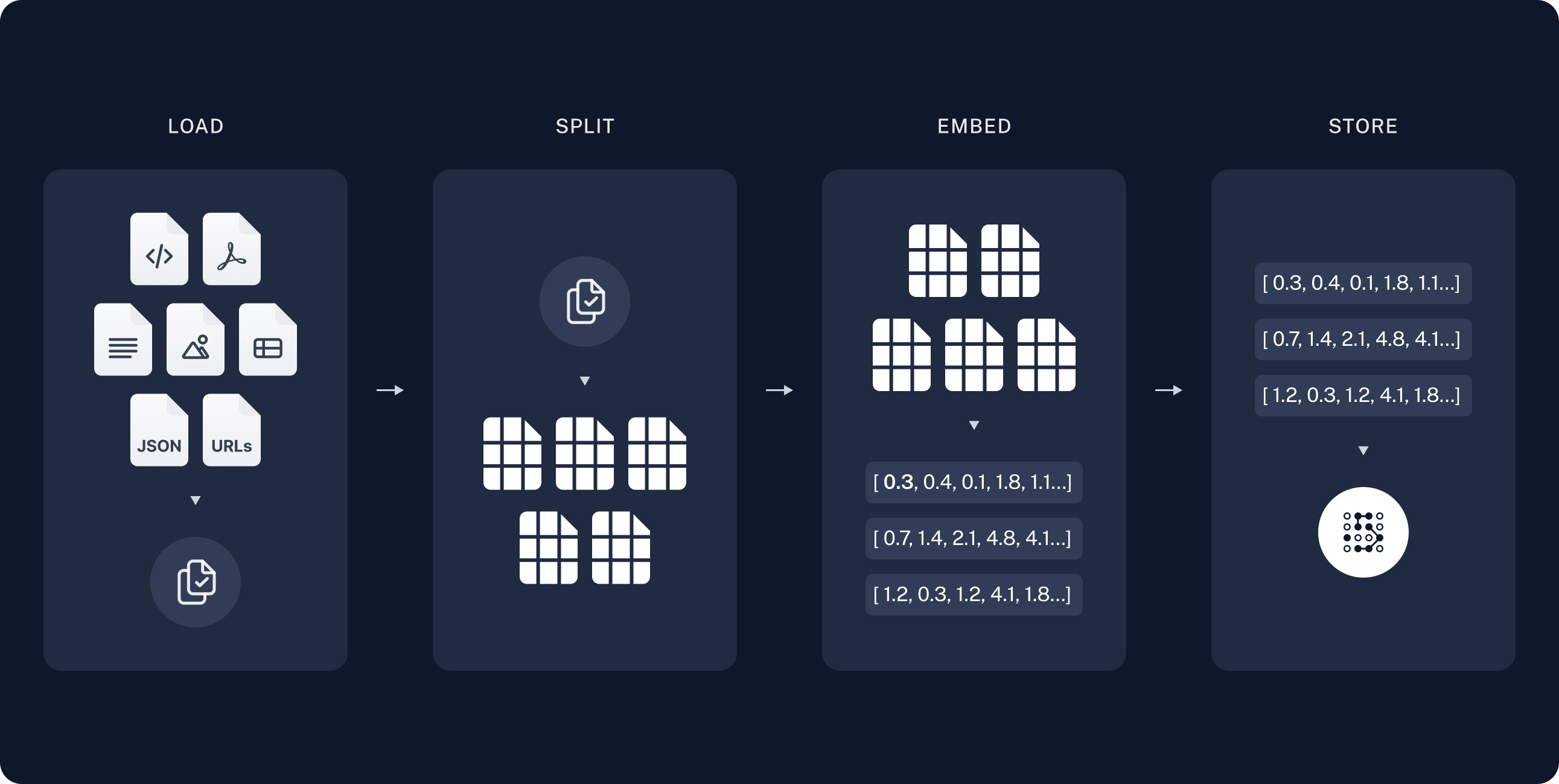

A typical RAG application has two main components:

Indexing: a pipeline for ingesting data from a source and indexing it. This usually happens offline.

Retrieval and generation: the actual RAG chain, which takes the user query at run time and retrieves the relevant data from the index, then passes that to the model.

Note: the indexing portion of this tutorial will largely follow the semantic search tutorial.

The most common full sequence from raw data to answer looks like:

Indexing

- Load: First we need to load our data. This is done with Document Loaders.

- Split: Text splitters break large Documents into smaller chunks. This is useful both for indexing data and passing it into a model, as large chunks are harder to search over and won’t fit in a model’s finite context window.

- Store: We need somewhere to store and index our splits, so that they can be searched over later. This is often done using a VectorStore and an Embeddings model.

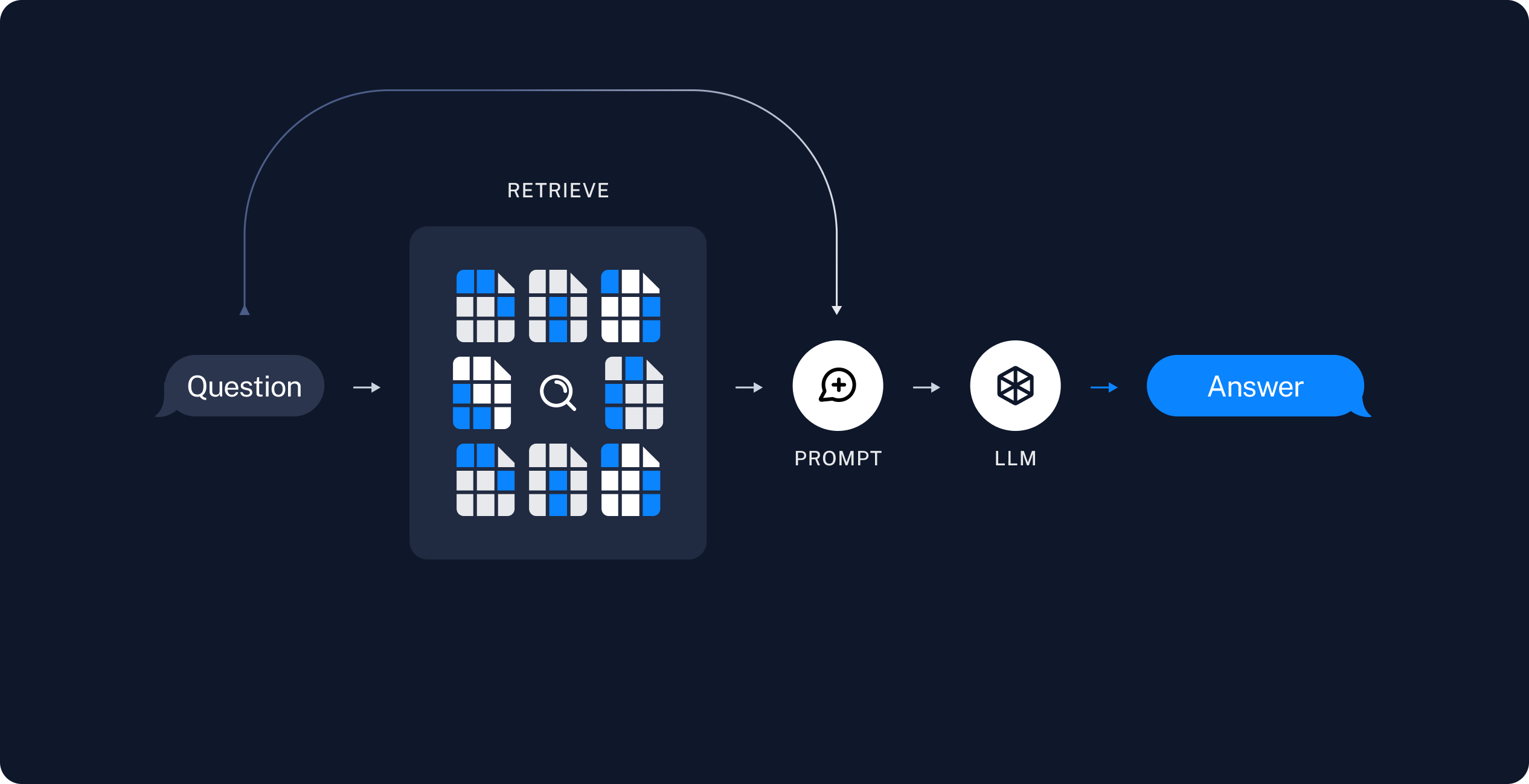
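The `chunkSize` and `chunkOverlap` settings used later in this tutorial can be pictured as a plain sliding window: each chunk holds at most `chunkSize` characters, and consecutive chunks share `chunkOverlap` characters so that text cut at a boundary still appears whole in one of them. The sketch below is a simplification for illustration only; `slidingWindowChunks` is a hypothetical helper, and LangChain's `RecursiveCharacterTextSplitter` additionally prefers to cut at separators such as newlines and spaces.

```typescript
// Hypothetical helper: fixed-size character chunks with overlap.
// Not LangChain's splitter; it ignores natural boundaries such as paragraphs.
function slidingWindowChunks(
  text: string,
  chunkSize: number,
  chunkOverlap: number,
): string[] {
  if (chunkSize <= 0 || chunkOverlap < 0 || chunkOverlap >= chunkSize) {
    throw new Error("Require 0 <= chunkOverlap < chunkSize");
  }
  // Each new chunk starts this many characters after the previous one.
  const step = chunkSize - chunkOverlap;
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    // Stop once a chunk reaches the end of the text.
    if (start + chunkSize >= text.length) break;
  }
  return chunks;
}

console.log(slidingWindowChunks("abcdefghij", 4, 2));
// [ 'abcd', 'cdef', 'efgh', 'ghij' ]
```

With `chunkSize: 4` and `chunkOverlap: 2`, each chunk repeats the last two characters of the previous one.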

Retrieval and generation

- Retrieve: Given a user input, relevant splits are retrieved from storage using a Retriever.

- Generate: A ChatModel / LLM produces an answer using a prompt that includes both the question and the retrieved data.

Once we’ve indexed our data, we will use LangGraph as our orchestration framework to implement the retrieval and generation steps.
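Stripped of any framework, the run-time half of RAG is two calls: retrieve documents for the question, then hand the question plus the retrieved text to a model. The sketch below uses hypothetical `DocRetriever` and `AnswerGenerator` function types with stub implementations so it runs without API keys; in the tutorial these roles are played by `vectorStore.similaritySearch` and the chat model, and the prompt wording here is an assumption.

```typescript
// Minimal document shape: text plus arbitrary metadata.
interface Doc {
  pageContent: string;
  metadata: Record<string, unknown>;
}

// Hypothetical function types standing in for a vector store and a chat model.
type DocRetriever = (query: string) => Promise<Doc[]>;
type AnswerGenerator = (prompt: string) => Promise<string>;

// Retrieve, build a prompt from the question and the retrieved text, then generate.
async function answerQuestion(
  question: string,
  retrieve: DocRetriever,
  generate: AnswerGenerator,
): Promise<{ question: string; context: Doc[]; answer: string }> {
  const context = await retrieve(question);
  const contextText = context.map((d) => d.pageContent).join("\n");
  const prompt = `Question: ${question}\nContext: ${contextText}\nAnswer:`;
  const answer = await generate(prompt);
  return { question, context, answer };
}

// Stubs so the sketch runs offline.
const stubRetriever: DocRetriever = async () => [
  { pageContent: "Task decomposition splits a task into steps.", metadata: {} },
];
const stubGenerator: AnswerGenerator = async (prompt) =>
  `(model output for a ${prompt.length}-character prompt)`;

answerQuestion("What is Task Decomposition?", stubRetriever, stubGenerator).then(
  (result) => console.log(result.answer),
);
```

Returning `context` alongside `answer` mirrors the state the LangGraph version keeps, which later makes it possible to report sources.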

Setup

Jupyter Notebook

This and other tutorials are perhaps most conveniently run in a Jupyter notebook. Going through guides in an interactive environment is a great way to better understand them. See here for instructions on how to install.

Installation

This guide requires the following dependencies. Install them with npm, yarn, or pnpm:

npm i langchain @langchain/core @langchain/langgraph

yarn add langchain @langchain/core @langchain/langgraph

pnpm add langchain @langchain/core @langchain/langgraph

For more details, see our Installation guide.

LangSmith

Many of the applications you build with LangChain will contain multiple steps with multiple invocations of LLM calls. As these applications get more and more complex, it becomes crucial to be able to inspect what exactly is going on inside your chain or agent. The best way to do this is with LangSmith.

After you sign up at the link above, make sure to set your environment variables to start logging traces:

export LANGSMITH_TRACING="true"

export LANGSMITH_API_KEY="..."

# Reduce tracing latency if you are not in a serverless environment

# export LANGCHAIN_CALLBACKS_BACKGROUND=true

Components

We will need to select three components from LangChain’s suite of integrations.

A chat model. Pick one of the following providers:

- Groq

- OpenAI

- Anthropic

- Google Gemini

- FireworksAI

- MistralAI

- VertexAI

Groq

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/groq

yarn add @langchain/groq

pnpm add @langchain/groq

Add environment variables

GROQ_API_KEY=your-api-key

Instantiate the model

import { ChatGroq } from "@langchain/groq";

const llm = new ChatGroq({

model: "llama-3.3-70b-versatile",

temperature: 0

});

OpenAI

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/openai

yarn add @langchain/openai

pnpm add @langchain/openai

Add environment variables

OPENAI_API_KEY=your-api-key

Instantiate the model

import { ChatOpenAI } from "@langchain/openai";

const llm = new ChatOpenAI({

model: "gpt-4o-mini",

temperature: 0

});

Anthropic

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/anthropic

yarn add @langchain/anthropic

pnpm add @langchain/anthropic

Add environment variables

ANTHROPIC_API_KEY=your-api-key

Instantiate the model

import { ChatAnthropic } from "@langchain/anthropic";

const llm = new ChatAnthropic({

model: "claude-3-5-sonnet-20240620",

temperature: 0

});

Google Gemini

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/google-genai

yarn add @langchain/google-genai

pnpm add @langchain/google-genai

Add environment variables

GOOGLE_API_KEY=your-api-key

Instantiate the model

import { ChatGoogleGenerativeAI } from "@langchain/google-genai";

const llm = new ChatGoogleGenerativeAI({

model: "gemini-2.0-flash",

temperature: 0

});

FireworksAI

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/community

yarn add @langchain/community

pnpm add @langchain/community

Add environment variables

FIREWORKS_API_KEY=your-api-key

Instantiate the model

import { ChatFireworks } from "@langchain/community/chat_models/fireworks";

const llm = new ChatFireworks({

model: "accounts/fireworks/models/llama-v3p1-70b-instruct",

temperature: 0

});

MistralAI

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/mistralai

yarn add @langchain/mistralai

pnpm add @langchain/mistralai

Add environment variables

MISTRAL_API_KEY=your-api-key

Instantiate the model

import { ChatMistralAI } from "@langchain/mistralai";

const llm = new ChatMistralAI({

model: "mistral-large-latest",

temperature: 0

});

VertexAI

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/google-vertexai

yarn add @langchain/google-vertexai

pnpm add @langchain/google-vertexai

Add environment variables

GOOGLE_APPLICATION_CREDENTIALS=credentials.json

Instantiate the model

import { ChatVertexAI } from "@langchain/google-vertexai";

const llm = new ChatVertexAI({

model: "gemini-1.5-flash",

temperature: 0

});

An embedding model. Pick one of the following providers:

- OpenAI

- Azure

- AWS

- VertexAI

- MistralAI

- Cohere

OpenAI

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/openai

yarn add @langchain/openai

pnpm add @langchain/openai

OPENAI_API_KEY=your-api-key

import { OpenAIEmbeddings } from "@langchain/openai";

const embeddings = new OpenAIEmbeddings({

model: "text-embedding-3-large"

});

Azure

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/openai

yarn add @langchain/openai

pnpm add @langchain/openai

AZURE_OPENAI_API_INSTANCE_NAME=<YOUR_INSTANCE_NAME>

AZURE_OPENAI_API_KEY=<YOUR_KEY>

AZURE_OPENAI_API_VERSION="2024-02-01"

import { AzureOpenAIEmbeddings } from "@langchain/openai";

const embeddings = new AzureOpenAIEmbeddings({

azureOpenAIApiEmbeddingsDeploymentName: "text-embedding-ada-002"

});

AWS

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/aws

yarn add @langchain/aws

pnpm add @langchain/aws

BEDROCK_AWS_REGION=your-region

import { BedrockEmbeddings } from "@langchain/aws";

const embeddings = new BedrockEmbeddings({

model: "amazon.titan-embed-text-v1"

});

VertexAI

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/google-vertexai

yarn add @langchain/google-vertexai

pnpm add @langchain/google-vertexai

GOOGLE_APPLICATION_CREDENTIALS=credentials.json

import { VertexAIEmbeddings } from "@langchain/google-vertexai";

const embeddings = new VertexAIEmbeddings({

model: "text-embedding-004"

});

MistralAI

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/mistralai

yarn add @langchain/mistralai

pnpm add @langchain/mistralai

MISTRAL_API_KEY=your-api-key

import { MistralAIEmbeddings } from "@langchain/mistralai";

const embeddings = new MistralAIEmbeddings({

model: "mistral-embed"

});

Cohere

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/cohere

yarn add @langchain/cohere

pnpm add @langchain/cohere

COHERE_API_KEY=your-api-key

import { CohereEmbeddings } from "@langchain/cohere";

const embeddings = new CohereEmbeddings({

model: "embed-english-v3.0"

});

And a vector store. Pick one of the following:

- Memory

- Chroma

- FAISS

- MongoDB

- PGVector

- Pinecone

- Qdrant

Memory

Install dependencies with npm, yarn, or pnpm:

npm i langchain

yarn add langchain

pnpm add langchain

import { MemoryVectorStore } from "langchain/vectorstores/memory";

const vectorStore = new MemoryVectorStore(embeddings);

Chroma

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/community

yarn add @langchain/community

pnpm add @langchain/community

import { Chroma } from "@langchain/community/vectorstores/chroma";

const vectorStore = new Chroma(embeddings, {

collectionName: "a-test-collection",

});

FAISS

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/community

yarn add @langchain/community

pnpm add @langchain/community

import { FaissStore } from "@langchain/community/vectorstores/faiss";

const vectorStore = new FaissStore(embeddings, {});

MongoDB

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/mongodb

yarn add @langchain/mongodb

pnpm add @langchain/mongodb

import { MongoDBAtlasVectorSearch } from "@langchain/mongodb"

import { MongoClient } from "mongodb";

const client = new MongoClient(process.env.MONGODB_ATLAS_URI || "");

const collection = client

.db(process.env.MONGODB_ATLAS_DB_NAME)

.collection(process.env.MONGODB_ATLAS_COLLECTION_NAME);

const vectorStore = new MongoDBAtlasVectorSearch(embeddings, {

collection: collection,

indexName: "vector_index",

textKey: "text",

embeddingKey: "embedding",

});

PGVector

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/community

yarn add @langchain/community

pnpm add @langchain/community

import { PGVectorStore } from "@langchain/community/vectorstores/pgvector";

const vectorStore = await PGVectorStore.initialize(embeddings, {})

Pinecone

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/pinecone

yarn add @langchain/pinecone

pnpm add @langchain/pinecone

import { PineconeStore } from "@langchain/pinecone";

import { Pinecone as PineconeClient } from "@pinecone-database/pinecone";

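const pinecone = new PineconeClient();

// Reads PINECONE_API_KEY from the environment; PINECONE_INDEX is assumed to hold your index name.

const pineconeIndex = pinecone.Index(process.env.PINECONE_INDEX!);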

const vectorStore = new PineconeStore(embeddings, {

pineconeIndex,

maxConcurrency: 5,

});

Qdrant

Install dependencies with npm, yarn, or pnpm:

npm i @langchain/qdrant

yarn add @langchain/qdrant

pnpm add @langchain/qdrant

import { QdrantVectorStore } from "@langchain/qdrant";

const vectorStore = await QdrantVectorStore.fromExistingCollection(embeddings, {

url: process.env.QDRANT_URL,

collectionName: "langchainjs-testing",

});

Preview

In this guide we’ll build an app that answers questions about a website’s content. The specific website we will use is the LLM Powered Autonomous Agents blog post by Lilian Weng, which allows us to ask questions about the contents of the post.

We can create a simple indexing pipeline and RAG chain to do this in ~50 lines of code.

import "cheerio";

import { CheerioWebBaseLoader } from "@langchain/community/document_loaders/web/cheerio";

import { Document } from "@langchain/core/documents";

import { ChatPromptTemplate } from "@langchain/core/prompts";

import { pull } from "langchain/hub";

import { Annotation, StateGraph } from "@langchain/langgraph";

import { RecursiveCharacterTextSplitter } from "@langchain/textsplitters";

// Load and chunk contents of blog

const pTagSelector = "p";

const cheerioLoader = new CheerioWebBaseLoader(

"https://lilianweng.github.io/posts/2023-06-23-agent/",

{

selector: pTagSelector

}

);

const docs = await cheerioLoader.load();

const splitter = new RecursiveCharacterTextSplitter({

chunkSize: 1000, chunkOverlap: 200

});

const allSplits = await splitter.splitDocuments(docs);

// Index chunks

await vectorStore.addDocuments(allSplits)

// Define prompt for question-answering

const promptTemplate = await pull<ChatPromptTemplate>("rlm/rag-prompt");

// Define state for application

const InputStateAnnotation = Annotation.Root({

question: Annotation<string>,

});

const StateAnnotation = Annotation.Root({

question: Annotation<string>,

context: Annotation<Document[]>,

answer: Annotation<string>,

});

// Define application steps

const retrieve = async (state: typeof InputStateAnnotation.State) => {

const retrievedDocs = await vectorStore.similaritySearch(state.question)

return { context: retrievedDocs };

};

const generate = async (state: typeof StateAnnotation.State) => {

const docsContent = state.context.map(doc => doc.pageContent).join("\n");

const messages = await promptTemplate.invoke({ question: state.question, context: docsContent });

const response = await llm.invoke(messages);

return { answer: response.content };

};

// Compile application and test

const graph = new StateGraph(StateAnnotation)

.addNode("retrieve", retrieve)

.addNode("generate", generate)

.addEdge("__start__", "retrieve")

.addEdge("retrieve", "generate")

.addEdge("generate", "__end__")

.compile();

let inputs = { question: "What is Task Decomposition?" };

const result = await graph.invoke(inputs);

console.log(result.answer);

Task decomposition is the process of breaking down complex tasks into smaller, more manageable steps. This can be achieved through various methods, including prompting large language models (LLMs) or using task-specific instructions. Techniques like Chain of Thought (CoT) and Tree of Thoughts further enhance this process by structuring reasoning and exploring multiple possibilities at each step.

Check out the LangSmith trace.

Detailed walkthrough

Let’s go through the above code step-by-step to really understand what’s going on.

1. Indexing

This section is an abbreviated version of the content in the semantic search tutorial. If you're comfortable with document loaders, embeddings, and vector stores, feel free to skip to the next section on retrieval and generation.

Loading documents

We need to first load the blog post contents. We can use DocumentLoaders for this, which are objects that load in data from a source and return a list of Documents. A Document is an object with some pageContent (string) and metadata (Record<string, any>).

In this case we’ll use the CheerioWebBaseLoader, which uses cheerio to load HTML from web URLs and parse it to text. We can pass custom selectors to the constructor to only parse specific elements:

import "cheerio";

import { CheerioWebBaseLoader } from "@langchain/community/document_loaders/web/cheerio";

const pTagSelector = "p";

const cheerioLoader = new CheerioWebBaseLoader(

"https://lilianweng.github.io/posts/2023-06-23-agent/",

{

selector: pTagSelector,

}

);

const docs = await cheerioLoader.load();

console.assert(docs.length === 1);

console.log(`Total characters: ${docs[0].pageContent.length}`);

Total characters: 22360

console.log(docs[0].pageContent.slice(0, 500));

Building agents with LLM (large language model) as its core controller is a cool concept. Several proof-of-concepts demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potentiality of LLM extends beyond generating well-written copies, stories, essays and programs; it can be framed as a powerful general problem solver.In a LLM-powered autonomous agent system, LLM functions as the agent’s brain, complemented by several key components:A complicated task usually involv
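The printed excerpt runs paragraphs together ("problem solver.In a LLM-powered..."): the text of every element matching the `p` selector is concatenated without a separator. The sketch below imitates that select-then-concatenate behavior with a regular expression purely for illustration; `extractParagraphText` is hypothetical, and a regex is not a robust HTML parser, which is why the tutorial relies on cheerio.

```typescript
// Hypothetical, regex-based stand-in for selecting <p> elements and reading their text.
// Adequate for this toy input only; real pages need a proper HTML parser such as cheerio.
function extractParagraphText(html: string): string {
  const paragraphs = html.match(/<p\b[^>]*>[\s\S]*?<\/p>/gi) ?? [];
  return paragraphs
    .map((p) => p.replace(/<[^>]+>/g, "")) // drop the <p> tags and any nested tags
    .join(""); // no separator, so sentences from adjacent paragraphs touch
}

const html =
  "<h1>Title</h1><p>First paragraph.</p><div>skipped</div><p>Second <b>bold</b> one.</p>";
console.log(extractParagraphText(html));
// First paragraph.Second bold one.
```

If the missing spaces matter for your use case, joining the selected paragraphs with a separator such as `"\n"` is one option.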

Go deeper

DocumentLoader: Class that loads data from a source as a list of Documents.

- Docs: Detailed documentation on how to use DocumentLoaders.

- Integrations

- Interface: API reference for the base interface.

Splitting documents

Our loaded document is over 22k characters. That can be too long for the context window of some models, and even models that can fit the full post in their context window can struggle to find information in very long inputs.

To handle this, we’ll split the Document into chunks for embedding and vector storage. This should help us retrieve only the most relevant parts of the blog post at run time.

As in the semantic search tutorial, we use a RecursiveCharacterTextSplitter, which will recursively split the document using common separators like new lines until each chunk is the appropriate size. This is the recommended text splitter for generic text use cases.

import { RecursiveCharacterTextSplitter } from "@langchain/textsplitters";

const splitter = new RecursiveCharacterTextSplitter({

chunkSize: 1000,

chunkOverlap: 200,

});

const allSplits = await splitter.splitDocuments(docs);

console.log(`Split blog post into ${allSplits.length} sub-documents.`);

Split blog post into 29 sub-documents.
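The recursive strategy can be sketched in a few lines: try the coarsest separator first, pack the resulting pieces into chunks no longer than `chunkSize`, and re-split a piece with finer separators only when it is too long on its own. This simplified `recursiveSplit` is hypothetical, omits `chunkOverlap`, and is not LangChain's implementation, so its chunk counts will not match the 29 shown above.

```typescript
// Hypothetical, simplified recursive splitter (no chunkOverlap).
// Tries coarse separators first and falls back to finer ones only when needed.
function recursiveSplit(
  text: string,
  chunkSize: number,
  separators: string[] = ["\n\n", "\n", " ", ""],
): string[] {
  if (text.length <= chunkSize || separators.length === 0) return [text];
  const [sep, ...finer] = separators;
  // The empty separator means "split into single characters".
  const pieces = sep === "" ? Array.from(text) : text.split(sep);
  const chunks: string[] = [];
  let current = "";
  for (const piece of pieces) {
    const candidate = current === "" ? piece : current + sep + piece;
    if (candidate.length <= chunkSize) {
      current = candidate; // keep packing pieces into the current chunk
      continue;
    }
    if (current !== "") chunks.push(current);
    if (piece.length > chunkSize) {
      // Too long even on its own: split it again with finer separators.
      chunks.push(...recursiveSplit(piece, chunkSize, finer));
      current = "";
    } else {
      current = piece;
    }
  }
  if (current !== "") chunks.push(current);
  return chunks;
}

console.log(recursiveSplit("aaa bbb\n\nccc ddd eee", 8));
// [ 'aaa bbb', 'ccc ddd', 'eee' ]
```

The paragraph break is respected first; only the second paragraph, which exceeds 8 characters, falls back to splitting on spaces.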

Go deeper

TextSplitter: Object that splits a list of Documents into smaller chunks. Subclass of DocumentTransformers.

- Explore context-aware splitters, which keep the location (“context”) of each split in the original Document: Code (15+ langs)

- Interface: API reference for the base interface.

DocumentTransformer: Object that performs a transformation on a list of Documents.

- Docs: Detailed documentation on how to use DocumentTransformers

- Interface: API reference for the base interface.

Storing documents

Now we need to index our 29 text chunks so that we can search over them at runtime. Following the semantic search tutorial, our approach is to embed the contents of each document split and insert these embeddings into a vector store. Given an input query, we can then use vector search to retrieve relevant documents.

We can embed and store all of our document splits in a single command using the vector store and embeddings model selected at the start of the tutorial.

await vectorStore.addDocuments(allSplits);
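Conceptually, a vector store answers a query by comparing the query's embedding with each stored embedding and returning the closest matches. The sketch below shows that idea with cosine similarity over tiny hand-written vectors; the vectors, `cosineSimilarity`, and `topK` are illustrative assumptions. Real embeddings come from the embeddings model, and many vector databases use approximate indexes rather than this brute-force scan.

```typescript
interface StoredChunk {
  text: string;
  vector: number[];
}

// Cosine similarity: dot product divided by the product of the vector lengths.
function cosineSimilarity(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Brute-force search: score every chunk, sort by score, keep the best k.
function topK(query: number[], store: StoredChunk[], k: number): StoredChunk[] {
  return store
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map(({ chunk }) => chunk);
}

// Toy 3-dimensional "embeddings" (real ones have hundreds or thousands of dimensions).
const store: StoredChunk[] = [
  { text: "task decomposition", vector: [0.9, 0.1, 0.0] },
  { text: "memory", vector: [0.1, 0.9, 0.0] },
  { text: "tool use", vector: [0.0, 0.2, 0.9] },
];
console.log(topK([1, 0, 0], store, 2).map((c) => c.text));
// [ 'task decomposition', 'memory' ]
```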

Go deeper

Embeddings: Wrapper around a text embedding model, used for converting text to embeddings.

- Docs: Detailed documentation on how to use embeddings.

- Integrations: 30+ integrations to choose from.

- Interface: API reference for the base interface.

VectorStore: Wrapper around a vector database, used for storing and querying embeddings.

- Docs: Detailed documentation on how to use vector stores.

- Integrations: 40+ integrations to choose from.

- Interface: API reference for the base interface.

This completes the Indexing portion of the pipeline. At this point we have a query-able vector store containing the chunked contents of our blog post. Given a user question, we should ideally be able to return the snippets of the blog post that answer the question.

2. Retrieval and Generation

Now let’s write the actual application logic. We want to create a simple application that takes a user question, searches for documents relevant to that question, passes the retrieved documents and initial question to a model, and returns an answer.

For generation, we will use the chat model selected at the start of the tutorial.

We’ll use a prompt for RAG that is checked into the LangChain prompt hub (here).

import { pull } from "langchain/hub";

import { ChatPromptTemplate } from "@langchain/core/prompts";

const promptTemplate = await pull<ChatPromptTemplate>("rlm/rag-prompt");

// Example:

const example_prompt = await promptTemplate.invoke({

context: "(context goes here)",

question: "(question goes here)",

});

const example_messages = example_prompt.messages;

console.assert(example_messages.length === 1);

example_messages[0].content;

You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: (question goes here)

Context: (context goes here)

Answer:

We’ll use LangGraph to tie together the retrieval and generation steps into a single application. This will bring a number of benefits:

- We can define our application logic once and automatically support multiple invocation modes, including streaming, async, and batched calls.

- We get streamlined deployments via LangGraph Platform.

- LangSmith will automatically trace the steps of our application together.

- We can easily add key features to our application, including persistence and human-in-the-loop approval, with minimal code changes.

To use LangGraph, we need to define three things:

- The state of our application;

- The nodes of our application (i.e., application steps);

- The “control flow” of our application (e.g., the ordering of the steps).

State:

The state of our application controls what data is input to the application, transferred between steps, and output by the application.

For a simple RAG application, we can just keep track of the input question, retrieved context, and generated answer.

Read more about defining graph states here.

import { Document } from "@langchain/core/documents";

import { Annotation } from "@langchain/langgraph";

const InputStateAnnotation = Annotation.Root({

question: Annotation<string>,

});

const StateAnnotation = Annotation.Root({

question: Annotation<string>,

context: Annotation<Document[]>,

answer: Annotation<string>,

});

Nodes (application steps)

Let’s start with a simple sequence of two steps: retrieval and generation.


const retrieve = async (state: typeof InputStateAnnotation.State) => {

const retrievedDocs = await vectorStore.similaritySearch(state.question);

return { context: retrievedDocs };

};

const generate = async (state: typeof StateAnnotation.State) => {

const docsContent = state.context.map((doc) => doc.pageContent).join("\n");

const messages = await promptTemplate.invoke({

question: state.question,

context: docsContent,

});

const response = await llm.invoke(messages);

return { answer: response.content };

};

Our retrieval step simply runs a similarity search using the input question, and the generation step formats the retrieved context and original question into a prompt for the chat model.
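The formatting half of the generation step is plain string assembly: the retrieved chunks are joined with newlines and substituted, together with the question, into the `{context}` and `{question}` slots of the prompt. The `fillTemplate` helper below is a hypothetical, simplified stand-in for `promptTemplate.invoke`; the real `ChatPromptTemplate` produces chat messages rather than a single string, and the template text here is an assumption.

```typescript
// Hypothetical stand-in for prompt formatting: replaces {name} placeholders in one pass.
function fillTemplate(template: string, values: Record<string, string>): string {
  return template.replace(/\{(\w+)\}/g, (_match: string, name: string) => {
    if (!Object.prototype.hasOwnProperty.call(values, name)) {
      throw new Error(`Missing value for {${name}}`);
    }
    return values[name];
  });
}

const ragTemplate =
  "Use the following context to answer the question.\n" +
  "Question: {question}\nContext: {context}\nAnswer:";

// Same joining as the generate step above: one chunk per line.
const retrievedTexts = ["Chunk one.", "Chunk two."];
const filled = fillTemplate(ragTemplate, {
  question: "What is Task Decomposition?",
  context: retrievedTexts.join("\n"),
});
console.log(filled);
```

Because `String.prototype.replace` does not rescan inserted text, braces that happen to appear inside retrieved chunks are left alone.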

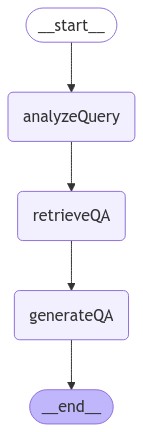

Control flow

Finally, we compile our application into a single graph object. In this case, we are just connecting the retrieval and generation steps into a single sequence.

import { StateGraph } from "@langchain/langgraph";

const graph = new StateGraph(StateAnnotation)

.addNode("retrieve", retrieve)

.addNode("generate", generate)

.addEdge("__start__", "retrieve")

.addEdge("retrieve", "generate")

.addEdge("generate", "__end__")

.compile();
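The edges describe a path from the special `__start__` node to `__end__`. For a purely linear graph like this one, the execution order can be recovered by following each node's single outgoing edge, as in the hypothetical `linearOrder` helper below. This only illustrates what the edges encode; LangGraph's own runtime also handles branching, conditional edges, and cycles.

```typescript
type Edge = [from: string, to: string];

// Follow single outgoing edges from "__start__" until "__end__" (linear graphs only).
function linearOrder(edges: Edge[]): string[] {
  const next = new Map<string, string>();
  for (const [from, to] of edges) {
    if (next.has(from)) throw new Error(`"${from}" has more than one outgoing edge`);
    next.set(from, to);
  }
  const order: string[] = [];
  const seen = new Set<string>();
  let node = next.get("__start__");
  while (node !== undefined && node !== "__end__") {
    if (seen.has(node)) throw new Error(`Cycle detected at "${node}"`);
    seen.add(node);
    order.push(node);
    node = next.get(node);
  }
  if (node !== "__end__") throw new Error("Path never reaches __end__");
  return order;
}

console.log(
  linearOrder([
    ["__start__", "retrieve"],
    ["retrieve", "generate"],
    ["generate", "__end__"],
  ]),
);
// [ 'retrieve', 'generate' ]
```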

LangGraph also comes with built-in utilities for visualizing the control flow of your application:

// Note: tslab only works inside a jupyter notebook. Don't worry about running this code yourself!

import * as tslab from "tslab";

const image = await graph.getGraph().drawMermaidPng();

const arrayBuffer = await image.arrayBuffer();

await tslab.display.png(new Uint8Array(arrayBuffer));

Do I need to use LangGraph?

LangGraph is not required to build a RAG application. Indeed, we can implement the same application logic through invocations of the individual components:

let question = "...";

const retrievedDocs = await vectorStore.similaritySearch(question);

const docsContent = retrievedDocs.map((doc) => doc.pageContent).join("\n");

const messages = await promptTemplate.invoke({

question: question,

context: docsContent,

});

const answer = await llm.invoke(messages);

The benefits of LangGraph include:

- Support for multiple invocation modes: this logic would need to be rewritten if we wanted to stream output tokens, or stream the results of individual steps;

- Automatic support for tracing via LangSmith and deployments via LangGraph Platform;

- Support for persistence, human-in-the-loop, and other features.

Many use-cases demand RAG in a conversational experience, such that a user can receive context-informed answers via a stateful conversation. As we will see in Part 2 of the tutorial, LangGraph’s management and persistence of state simplifies these applications enormously.

Usage

Let’s test our application! LangGraph supports multiple invocation modes, including standard invocation and streaming.

Invoke:

let inputs = { question: "What is Task Decomposition?" };

const result = await graph.invoke(inputs);

console.log(result.context.slice(0, 2));

console.log(`\nAnswer: ${result["answer"]}`);

[

Document {

pageContent: 'hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.Task decomposition can be done (1) by LLM with simple prompting like "Steps for XYZ.\\n1.", "What are the subgoals for achieving XYZ?", (2) by using task-specific instructions; e.g. "Write a story outline." for writing a novel, or (3) with human inputs.Another quite distinct approach, LLM+P (Liu et al. 2023), involves relying on an external classical planner to do long-horizon planning. This approach utilizes the Planning Domain',

metadata: {

source: 'https://lilianweng.github.io/posts/2023-06-23-agent/',

loc: [Object]

},

id: undefined

},

Document {

pageContent: 'Building agents with LLM (large language model) as its core controller is a cool concept. Several proof-of-concepts demos, such as AutoGPT, GPT-Engineer and BabyAGI, serve as inspiring examples. The potentiality of LLM extends beyond generating well-written copies, stories, essays and programs; it can be framed as a powerful general problem solver.In a LLM-powered autonomous agent system, LLM functions as the agent’s brain, complemented by several key components:A complicated task usually involves many steps. An agent needs to know what they are and plan ahead.Chain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.Tree of Thoughts (Yao et al.',

metadata: {

source: 'https://lilianweng.github.io/posts/2023-06-23-agent/',

loc: [Object]

},

id: undefined

}

]

Answer: Task decomposition is the process of breaking down complex tasks into smaller, more manageable steps. This can be achieved through various methods, including prompting large language models (LLMs) to outline steps or using task-specific instructions. Techniques like Chain of Thought (CoT) and Tree of Thoughts further enhance this process by structuring reasoning and exploring multiple possibilities at each step.

Stream steps:

console.log(inputs);

console.log("\n====\n");

for await (const chunk of await graph.stream(inputs, {

streamMode: "updates",

})) {

console.log(chunk);

console.log("\n====\n");

}

{ question: 'What is Task Decomposition?' }

====

{

retrieve: { context: [ [Document], [Document], [Document], [Document] ] }

}

====

{

generate: {

answer: 'Task decomposition is the process of breaking down complex tasks into smaller, more manageable steps. This can be achieved through various methods, including prompting large language models (LLMs) or using task-specific instructions. Techniques like Chain of Thought (CoT) and Tree of Thoughts further enhance this process by structuring reasoning and exploring multiple possibilities at each step.'

}

}

====
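With `streamMode: "updates"`, each chunk is keyed by the node that just ran and contains only the fields that node returned, which is why the output above shows `retrieve` with `context` and then `generate` with `answer`. The async generator below sketches that behavior for a linear sequence of nodes; `runNodes` and the stub nodes are hypothetical and stand in for LangGraph's far more general runtime. It assumes a "last value wins" merge for each state field.

```typescript
type State = Record<string, unknown>;
type NodeFn = (state: State) => Promise<State>;

// Run nodes in order, merging each partial update into the state
// (later values overwrite earlier ones) and yielding { [nodeName]: update }.
async function* runNodes(
  input: State,
  nodes: Array<[name: string, fn: NodeFn]>,
): AsyncGenerator<Record<string, State>, State> {
  let state: State = { ...input };
  for (const [name, fn] of nodes) {
    const update = await fn(state);
    state = { ...state, ...update };
    yield { [name]: update };
  }
  return state;
}

// Stub nodes mirroring the tutorial's retrieve -> generate sequence.
const stubNodes: Array<[string, NodeFn]> = [
  ["retrieve", async () => ({ context: ["doc A", "doc B"] })],
  ["generate", async (s) => ({ answer: `Answer from ${(s.context as string[]).length} docs` })],
];

(async () => {
  for await (const chunk of runNodes({ question: "What is Task Decomposition?" }, stubNodes)) {
    console.log(chunk);
  }
})();
```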

Stream tokens (requires @langchain/core >= 0.3.24 and @langchain/langgraph >= 0.2.34 with the above implementation):

const stream = await graph.stream(inputs, { streamMode: "messages" });

for await (const [message, _metadata] of stream) {

process.stdout.write(message.content + "|");

}

|Task| decomposition| is| the| process| of| breaking| down| complex| tasks| into| smaller|,| more| manageable| steps|.| This| can| be| achieved| through| various| methods|,| including| prompting| large| language| models| (|LL|Ms|)| to| outline| steps| or| using| task|-specific| instructions|.| Techniques| like| Chain| of| Thought| (|Co|T|)| and| Tree| of| Thoughts| further| enhance| this| process| by| struct|uring| reasoning| and| exploring| multiple| possibilities| at| each| step|.||

Streaming tokens with the current implementation, using .invoke in the generate step, requires @langchain/core >= 0.3.24 and @langchain/langgraph >= 0.2.34. See details here.
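In `messages` mode, each streamed chunk carries a small piece of the answer (the `|` separators in the output above mark the boundaries), so a consumer typically shows pieces as they arrive and concatenates them to recover the full text. The sketch below does this over a hypothetical `fakeTokenStream` generator in place of `graph.stream(inputs, { streamMode: "messages" })` and assumes plain string content; `collectTokens` is likewise hypothetical.

```typescript
// Hypothetical token source standing in for a model's streamed output.
async function* fakeTokenStream(): AsyncGenerator<string> {
  for (const token of ["Task", " decomposition", " is", " breaking", " tasks", " down", "."]) {
    yield token;
  }
}

// Consume tokens as they arrive, reporting each one and accumulating the full answer.
async function collectTokens(
  stream: AsyncIterable<string>,
  onToken: (token: string) => void,
): Promise<string> {
  let answer = "";
  for await (const token of stream) {
    onToken(token);
    answer += token;
  }
  return answer;
}

collectTokens(fakeTokenStream(), (t) => console.log(`${t}|`)).then((answer) =>
  console.log(`Full answer: ${answer}`),
);
```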

Returning sources

Note that by storing the retrieved context in the state of the graph, we recover sources for the model’s generated answer in the "context" field of the state. See this guide on returning sources for more detail.
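Because the graph's final state includes `context`, citing sources comes down to reading each document's metadata. The sketch below collects the distinct `source` URLs from retrieved documents in retrieval order; the `SourcedDoc` shape mirrors the `pageContent`/`metadata` fields printed earlier, and `uniqueSources` is a hypothetical helper.

```typescript
interface SourcedDoc {
  pageContent: string;
  metadata: { source?: string; [key: string]: unknown };
}

// Distinct source URLs in the order they were first retrieved.
function uniqueSources(context: SourcedDoc[]): string[] {
  const sources: string[] = [];
  for (const doc of context) {
    const source = doc.metadata.source;
    if (typeof source === "string" && !sources.includes(source)) {
      sources.push(source);
    }
  }
  return sources;
}

const retrieved: SourcedDoc[] = [
  { pageContent: "hard tasks into smaller...", metadata: { source: "https://lilianweng.github.io/posts/2023-06-23-agent/" } },
  { pageContent: "Building agents with LLM...", metadata: { source: "https://lilianweng.github.io/posts/2023-06-23-agent/" } },
];
console.log(uniqueSources(retrieved));
// [ 'https://lilianweng.github.io/posts/2023-06-23-agent/' ]
```

In the tutorial, the same function could be applied to `result.context` after `graph.invoke`.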

Go deeper

Chat models take in a sequence of messages and return a message.

- Docs

- Integrations: 25+ integrations to choose from.

Customizing the prompt

As shown above, we can load prompts (e.g., this RAG prompt) from the prompt hub. The prompt can also be easily customized. For example:

const template = `Use the following pieces of context to answer the question at the end.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

Use three sentences maximum and keep the answer as concise as possible.

Always say "thanks for asking!" at the end of the answer.

{context}

Question: {question}

Helpful Answer:`;

const promptTemplateCustom = ChatPromptTemplate.fromMessages([

["user", template],

]);

Query analysis

So far, we are executing the retrieval using the raw input query. However, there are some advantages to allowing a model to generate the query for retrieval purposes. For example:

- In addition to semantic search, we can build in structured filters (e.g., “Find documents since the year 2020.”);

- The model can rewrite user queries, which may be multifaceted or include irrelevant language, into more effective search queries.

Query analysis employs models to transform or construct optimized search queries from raw user input. We can easily incorporate a query analysis step into our application. For illustrative purposes, let’s add some metadata to the documents in our vector store. We will add some (contrived) sections to the document which we can filter on later.

const totalDocuments = allSplits.length;

const third = Math.floor(totalDocuments / 3);

allSplits.forEach((document, i) => {

if (i < third) {

document.metadata["section"] = "beginning";

} else if (i < 2 * third) {

document.metadata["section"] = "middle";

} else {

document.metadata["section"] = "end";

}

});

allSplits[0].metadata;

{

source: 'https://lilianweng.github.io/posts/2023-06-23-agent/',

loc: { lines: { from: 1, to: 1 } },

section: 'beginning'

}
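Because `third` is rounded down, the sections are not always equal in size: any remainder lands in `"end"`. For the 29 splits produced earlier, `third` is 9, so the sections hold 9, 9, and 11 chunks. The pure functions below reproduce the same rule as the `forEach` above so the counts can be checked without a vector store; `sectionFor` and `countSections` are hypothetical helpers.

```typescript
type Section = "beginning" | "middle" | "end";

// Same rule as the forEach above: first third, second third, then everything else.
function sectionFor(index: number, total: number): Section {
  const third = Math.floor(total / 3);
  if (index < third) return "beginning";
  if (index < 2 * third) return "middle";
  return "end";
}

function countSections(total: number): Record<Section, number> {
  const counts: Record<Section, number> = { beginning: 0, middle: 0, end: 0 };
  for (let i = 0; i < total; i++) counts[sectionFor(i, total)]++;
  return counts;
}

console.log(countSections(29));
// { beginning: 9, middle: 9, end: 11 }
```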

We will need to update the documents in our vector store. We will use a simple MemoryVectorStore for this, as we will use some of its specific features (i.e., metadata filtering). Refer to the vector store integration documentation for relevant features of your chosen vector store.

import { MemoryVectorStore } from "langchain/vectorstores/memory";

const vectorStoreQA = new MemoryVectorStore(embeddings);

await vectorStoreQA.addDocuments(allSplits);
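`MemoryVectorStore.similaritySearch` accepts a filter function over documents. The sketch below illustrates why the order of filtering and ranking matters when only the top `k` results are kept: ranking first and filtering afterwards can return fewer than `k` matches from the requested section. The scores are made up, and `filterThenRank` and `rankThenFilter` are hypothetical helpers, not the vector store's code.

```typescript
interface ScoredDoc {
  text: string;
  section: "beginning" | "middle" | "end";
  score: number; // pretend similarity to the query
}

const byScoreDesc = (a: ScoredDoc, b: ScoredDoc) => b.score - a.score;

// Keep only matching documents, then take the k best.
function filterThenRank(docs: ScoredDoc[], k: number, keep: (d: ScoredDoc) => boolean): ScoredDoc[] {
  return docs.filter(keep).sort(byScoreDesc).slice(0, k);
}

// Take the k best overall, then drop non-matching ones (may return fewer than k).
function rankThenFilter(docs: ScoredDoc[], k: number, keep: (d: ScoredDoc) => boolean): ScoredDoc[] {
  return [...docs].sort(byScoreDesc).slice(0, k).filter(keep);
}

const scored: ScoredDoc[] = [
  { text: "a", section: "beginning", score: 0.9 },
  { text: "b", section: "middle", score: 0.8 },
  { text: "c", section: "end", score: 0.5 },
  { text: "d", section: "end", score: 0.4 },
];
const isEnd = (d: ScoredDoc) => d.section === "end";
console.log(filterThenRank(scored, 2, isEnd).map((d) => d.text)); // [ 'c', 'd' ]
console.log(rankThenFilter(scored, 2, isEnd).map((d) => d.text)); // []
```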

Let’s next define a schema for our search query. We will use structured output for this purpose. Here we define a query as containing a string query and a document section (either “beginning”, “middle”, or “end”), but this can be defined however you like.

import { z } from "zod";

const searchSchema = z.object({
  query: z.string().describe("Search query to run."),
  section: z.enum(["beginning", "middle", "end"]).describe("Section to query."),
});

const structuredLlm = llm.withStructuredOutput(searchSchema);
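For reference, `z.infer<typeof searchSchema>` yields the type `{ query: string; section: "beginning" | "middle" | "end" }`. The sketch below writes that type out by hand together with a hypothetical `isSearch` guard to illustrate the contract the structured output is meant to satisfy. It does not reproduce how zod or `withStructuredOutput` validate model output internally.

```typescript
// Hand-written equivalent of the type z.infer<typeof searchSchema> produces.
type Section = "beginning" | "middle" | "end";

interface Search {
  query: string;
  section: Section;
}

const SECTIONS: readonly Section[] = ["beginning", "middle", "end"];

// Hypothetical runtime guard standing in for schema validation: reject values
// whose `query` is not a string or whose `section` is outside the enum.
function isSearch(value: unknown): value is Search {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.query === "string" &&
    typeof v.section === "string" &&
    (SECTIONS as readonly string[]).includes(v.section)
  );
}

// A raw JSON payload of the shape a model might emit for the question
// "What does the end of the post say about Task Decomposition?"
const raw = '{"query": "Task Decomposition", "section": "end"}';
const parsed: unknown = JSON.parse(raw);
if (isSearch(parsed)) {
  console.log(`search "${parsed.query}" in section ${parsed.section}`);
}
```

Restricting `section` to an enum is what makes the later metadata filter safe: any value the schema accepts corresponds to a label we actually assigned.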

Finally, we add a step to our LangGraph application to generate a query from the user’s raw input:

const StateAnnotationQA = Annotation.Root({
  question: Annotation<string>,
  search: Annotation<z.infer<typeof searchSchema>>,
  context: Annotation<Document[]>,
  answer: Annotation<string>,
});

const analyzeQuery = async (state: typeof InputStateAnnotation.State) => {
  const result = await structuredLlm.invoke(state.question);
  return { search: result };
};

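const retrieveQA = async (state: typeof StateAnnotationQA.State) => {
  // Keep only chunks whose metadata matches the section chosen by analyzeQuery.
  const filter = (doc: Document) =>
    doc.metadata.section === state.search.section;
  // Search the re-indexed store, whose documents carry the `section` metadata.
  const retrievedDocs = await vectorStoreQA.similaritySearch(
    state.search.query,
    2,
    filter
  );
  return { context: retrievedDocs };
};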

const generateQA = async (state: typeof StateAnnotationQA.State) => {
  const docsContent = state.context.map((doc) => doc.pageContent).join("\n");
  const messages = await promptTemplate.invoke({
    question: state.question,
    context: docsContent,
  });
  const response = await llm.invoke(messages);
  return { answer: response.content };
};

const graphQA = new StateGraph(StateAnnotationQA)
  .addNode("analyzeQuery", analyzeQuery)
  .addNode("retrieveQA", retrieveQA)
  .addNode("generateQA", generateQA)
  .addEdge("__start__", "analyzeQuery")
  .addEdge("analyzeQuery", "retrieveQA")
  .addEdge("retrieveQA", "generateQA")
  .addEdge("generateQA", "__end__")
  .compile();
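The compiled graph runs its three nodes in a fixed order, and each node returns only the keys it changes. The sketch below emulates that flow in plain TypeScript and is not LangGraph. The hypothetical `toyAnalyze`, `toyRetrieve` and `toyGenerate` functions stand in for the real nodes and run synchronously for brevity. Each partial update is merged into the state by overwriting keys, which is our understanding of how annotations declared without a reducer behave.

```typescript
// Plain-TypeScript emulation of the linear graph above; NOT LangGraph.
// Each node reads the full state and returns a partial update, which is
// merged into the state by overwriting keys.
type Section = "beginning" | "middle" | "end";

interface QAState {
  question: string;
  search?: { query: string; section: Section };
  context?: string[];
  answer?: string;
}

// Synchronous here for brevity; the tutorial's nodes are async.
type QANode = (state: QAState) => Partial<QAState>;

// Hypothetical stand-in for analyzeQuery: a crude keyword check, not a model.
const toyAnalyze: QANode = (s) => {
  const section: Section = s.question.includes("end") ? "end" : "beginning";
  return { search: { query: s.question, section } };
};

// Hypothetical stand-in for retrieveQA.
const toyRetrieve: QANode = (s) => ({
  context: [`chunk from the ${s.search?.section ?? "unknown"} section`],
});

// Hypothetical stand-in for generateQA.
const toyGenerate: QANode = (s) => ({
  answer: `Answer grounded in ${s.context?.length ?? 0} chunk(s).`,
});

// Mirrors the edges __start__ -> analyze -> retrieve -> generate -> __end__.
function runLinear(nodes: QANode[], input: QAState): QAState {
  let state: QAState = { ...input };
  for (const node of nodes) {
    state = { ...state, ...node(state) };
  }
  return state;
}

const finalState = runLinear([toyAnalyze, toyRetrieve, toyGenerate], {
  question: "What does the end of the post say about Task Decomposition?",
});
console.log(finalState);
```

Because no node returns `question`, it survives unchanged into the final state, while `search`, `context` and `answer` are filled in one step at a time.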

// Note: tslab only works inside a Jupyter notebook. Don't worry about running this code yourself!
import * as tslab from "tslab";

const image = await graphQA.getGraph().drawMermaidPng();
const arrayBuffer = await image.arrayBuffer();
await tslab.display.png(new Uint8Array(arrayBuffer));

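We can test our implementation by asking specifically for context from the end of the post. Note that the model's answer now draws on different information than in the earlier answers, because retrieval is restricted to chunks labelled with the "end" section.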

let inputsQA = {
  question: "What does the end of the post say about Task Decomposition?",
};

console.log(inputsQA);
console.log("\n====\n");

for await (const chunk of await graphQA.stream(inputsQA, {
  streamMode: "updates",
})) {
  console.log(chunk);
  console.log("\n====\n");
}

{
  question: 'What does the end of the post say about Task Decomposition?'
}

====

{
  analyzeQuery: { search: { query: 'Task Decomposition', section: 'end' } }
}

====

{ retrieveQA: { context: [ [Document], [Document] ] } }

====

{
  generateQA: {
    answer: 'The end of the post emphasizes the importance of task decomposition by outlining a structured approach to organizing code into separate files and functions. It highlights the need for clarity and compatibility among different components, ensuring that each part of the architecture is well-defined and functional. This methodical breakdown aids in maintaining best practices and enhances code readability and manageability.'
  }
}

====

In both the streamed steps and the LangSmith trace, we can now observe the structured query that was fed into the retrieval step.
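With `streamMode: "updates"`, each streamed chunk above is keyed by the node that produced it and holds only that node's update. A plain async generator can sketch that shape. `streamUpdates` and the three inline nodes are hypothetical stand-ins, not LangGraph's implementation.

```typescript
// Sketch of the chunk shape produced by streamMode: "updates": after each node
// runs, yield { nodeName: partialUpdate }. Plain TypeScript, NOT LangGraph.
type StreamState = Record<string, unknown>;
type AsyncNode = (state: StreamState) => Promise<StreamState>;

async function* streamUpdates(
  nodes: Array<[string, AsyncNode]>,
  input: StreamState,
): AsyncGenerator<Record<string, StreamState>> {
  let state: StreamState = { ...input };
  for (const [name, node] of nodes) {
    const update = await node(state);
    state = { ...state, ...update }; // merge so later nodes see earlier outputs
    yield { [name]: update }; // report only this node's update
  }
}

// Hypothetical nodes that mimic the shape (not the content) of the real ones.
const toyPipeline: Array<[string, AsyncNode]> = [
  ["analyzeQuery", async () => ({ search: { query: "Task Decomposition", section: "end" } })],
  ["retrieveQA", async (s) => ({ context: [`chunk for ${JSON.stringify(s.search)}`] })],
  ["generateQA", async (s) => ({ answer: `Used ${(s.context as string[]).length} chunk(s).` })],
];

async function collectNodeNames(): Promise<string[]> {
  const names: string[] = [];
  for await (const chunk of streamUpdates(toyPipeline, {
    question: "What does the end of the post say about Task Decomposition?",
  })) {
    console.log(chunk);
    names.push(...Object.keys(chunk));
  }
  return names;
}

const streamedNames = collectNodeNames();
```

Logging each chunk as it arrives is what lets you inspect the structured `search` object before retrieval runs, instead of only seeing the final answer.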

Query analysis is a rich problem with a wide range of approaches. Refer to the how-to guides for more examples.

Next steps

We’ve covered the steps to build a basic Q&A app over data:

- Loading data with a Document Loader

- Chunking the indexed data with a Text Splitter to make it more easily usable by a model

- Embedding the chunks and storing them in a vector store

- Retrieving the previously stored chunks in response to incoming questions

- Generating an answer using the retrieved chunks as context

In Part 2 of the tutorial, we will extend the implementation here to accommodate conversation-style interactions and multi-step retrieval processes.

Further reading:

- Return sources: Learn how to return source documents

- Streaming: Learn how to stream outputs and intermediate steps

- Add chat history: Learn how to add chat history to your app

- Retrieval conceptual guide: A high-level overview of specific retrieval techniques